Beyond Proxies: Why Enterprises Need a Unified AI Gateway

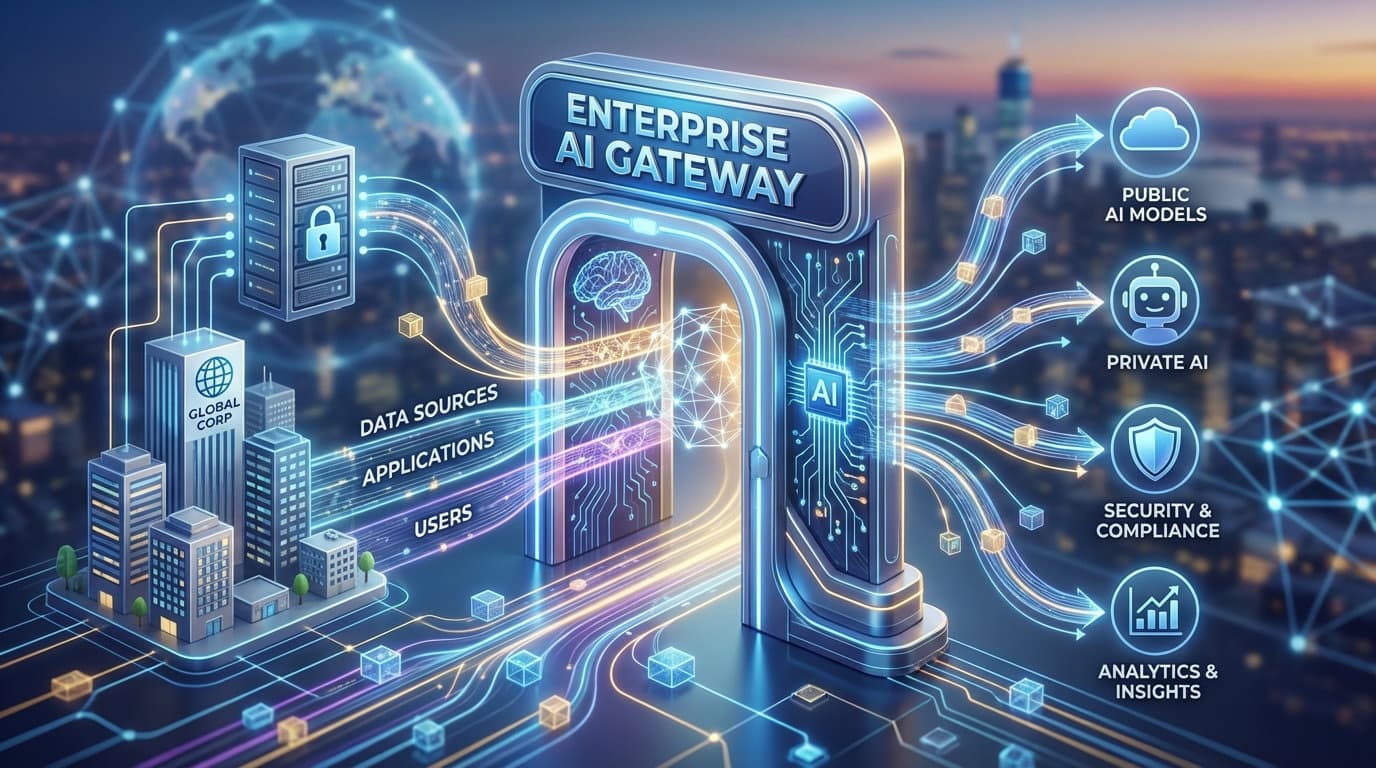

Learn why simple LLM proxies aren't enough and how a unified AI gateway delivers centralized access control, cost visibility, compliance, and security.

AI is no longer a tech team experiment. It's in customer support, sales, marketing, legal, HR, and finance. Every department now has a use case—and every department is signing up for different tools, using different providers, with no unified oversight.

The result? Shadow AI sprawl, unpredictable costs, and zero visibility into what data is flowing where.

The Proxy Problem

Simple LLM proxies solve part of this: cost tracking and provider routing. They let you switch between OpenAI and Anthropic, monitor spend, and work around provider rate limits.

But proxies are point solutions. They don't answer the questions that keep IT and finance leaders up at night:

- Who accessed what model, and what did they send it?

- Why did our AI spend triple last month?

- Can we prove to auditors that customer data wasn't exposed?

- What happens when our primary provider has an outage?

Enterprises don't need another tool to manage. They need a single platform that handles AI access, security, cost management, and compliance in one place.

What a Unified AI Gateway Delivers

Centralized Access Control

One platform where every employee—from the marketing intern to the CFO—gets appropriate AI access. Role-based permissions (Owner, Admin, Developer) that map to how teams actually work. No more scattered API keys or untracked ChatGPT Plus subscriptions.
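To make role-based permissions concrete, here is a minimal sketch of a role check. The three roles come from the text above; the action names and the mapping of roles to actions are assumptions made for illustration, not the permission model of any particular gateway.

```python
from enum import Enum


class Role(Enum):
    OWNER = "owner"
    ADMIN = "admin"
    DEVELOPER = "developer"


# Hypothetical permission sets; a real gateway defines its own actions.
PERMISSIONS = {
    Role.OWNER: {"call_models", "manage_keys", "manage_members", "manage_billing"},
    Role.ADMIN: {"call_models", "manage_keys", "manage_members"},
    Role.DEVELOPER: {"call_models"},
}


def is_allowed(role, action):
    """Return True if the role's permission set includes the action."""
    return action in PERMISSIONS.get(role, set())
```

With a table like this, a developer can call models but cannot touch billing, and every decision goes through one function that can also be logged.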

Complete Cost Visibility

Real-time spend broken down by team, project, and individual. Set budgets, get alerts, and enable internal chargebacks. One company reduced their AI spend by 40% simply by seeing which teams were over-prompting.
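As a rough illustration of per-team spend and budget alerts, the sketch below aggregates usage records and flags teams that exceed their budget. The record layout, team names, and dollar figures are invented sample data, not real usage numbers.

```python
from collections import defaultdict

# Hypothetical usage records: (team, project, user, cost in USD).
usage = [
    ("marketing", "campaigns", "ana", 120.0),
    ("marketing", "seo", "ben", 80.0),
    ("support", "chatbot", "chen", 300.0),
]

# Hypothetical monthly budgets per team, in USD.
budgets = {"marketing": 250.0, "support": 250.0}


def spend_by_team(records):
    """Sum cost per team across all usage records."""
    totals = defaultdict(float)
    for team, _project, _user, cost in records:
        totals[team] += cost
    return dict(totals)


def over_budget(totals, team_budgets):
    """Return teams whose spend exceeds their budget, sorted by name.

    Teams without a configured budget are never flagged.
    """
    return sorted(
        team
        for team, spent in totals.items()
        if spent > team_budgets.get(team, float("inf"))
    )


totals = spend_by_team(usage)
alerts = over_budget(totals, budgets)
```

The same grouping works for projects or individuals by changing which field is used as the key, which is also the basis for internal chargebacks.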

Audit Trails for Compliance

Every AI interaction is logged: who prompted what, when, with which model, and what it cost. When the auditor asks "what data touched AI systems last quarter?", you have the answer in seconds.
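One way to picture such an audit trail is a structured record per request, written as one JSON object per line. The sketch below is an assumption about what a record might contain (user, model, prompt, cost, timestamp); the field names and sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    user: str
    model: str
    prompt: str
    cost_usd: float
    timestamp: str  # ISO 8601 string with UTC offset


def make_record(user, model, prompt, cost_usd, now=None):
    """Create a record, stamping it with the current UTC time by default."""
    now = now or datetime.now(timezone.utc)
    return AuditRecord(user, model, prompt, cost_usd, now.isoformat())


def to_log_line(record):
    """Serialize a record as a single JSON line."""
    return json.dumps(asdict(record), sort_keys=True)


def records_between(records, start, end):
    """Return records with start <= timestamp < end."""
    return [
        r for r in records
        if start <= datetime.fromisoformat(r.timestamp) < end
    ]


# Example: one interaction in February, queried for the first quarter.
record = make_record(
    "ana", "example-model", "Summarize this contract", 0.02,
    now=datetime(2024, 2, 15, tzinfo=timezone.utc),
)
first_quarter = records_between(
    [record],
    datetime(2024, 1, 1, tzinfo=timezone.utc),
    datetime(2024, 4, 1, tzinfo=timezone.utc),
)
```

Answering the auditor's question then becomes a date-range filter over the log rather than a hunt across vendor dashboards.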

Automatic Failover and Optimization

Multi-provider routing means you're never locked into one vendor. When OpenAI has latency issues, requests automatically route to Anthropic or Google. Smart routing optimizes for cost, speed, or reliability based on your priorities.
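The failover idea can be sketched as trying providers in priority order and moving on when one fails. This is a simplified illustration: the provider callables are stand-ins rather than real SDK calls, and it fails over on errors only, not on latency or cost, which a production router would also weigh.

```python
class ProviderError(Exception):
    """Raised by a provider callable when a request fails."""


def complete_with_failover(prompt, providers):
    """Try each (name, call) pair in order; return (name, reply) from the first success.

    Raises ProviderError if every provider fails.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for real provider clients.
def flaky_primary(prompt):
    raise ProviderError("timeout")


def healthy_secondary(prompt):
    return f"echo: {prompt}"


provider_name, reply = complete_with_failover(
    "hello",
    [("primary", flaky_primary), ("secondary", healthy_secondary)],
)
```

Reordering the provider list is the simplest form of "optimizing for your priorities": put the cheapest provider first for cost, or the fastest first for speed.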

Enterprise-Grade Security

This is where self-hosting becomes critical. A gateway deployed in your own infrastructure means prompts containing customer PII, contracts, and internal strategy never leave your network. You control data retention. Provider API keys stay in your systems. Compliance audits become straightforward: here's the server, here's the data, here's who accessed it.

The Business Case

For a 200-person company spending $15K/month on AI:

- Cost savings: 20-40% reduction through usage visibility and intelligent routing

- Risk reduction: Eliminated shadow AI and data exposure from uncontrolled tools

- Operational efficiency: One platform instead of managing 5+ vendor relationships

- Audit readiness: Compliance documentation that used to take weeks now takes minutes
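The cost-savings line above can be turned into dollar figures with simple arithmetic. The sketch below uses only the numbers stated in this section ($15K/month and a 20-40% reduction); the annual totals are just those monthly figures multiplied by twelve.

```python
MONTHLY_SPEND_USD = 15_000
SAVINGS_PERCENT_RANGE = (20, 40)


def monthly_savings(spend, percent):
    """Savings in whole dollars for a given percentage reduction."""
    return spend * percent // 100


low, high = (monthly_savings(MONTHLY_SPEND_USD, p) for p in SAVINGS_PERCENT_RANGE)
annual_low, annual_high = low * 12, high * 12

print(f"Monthly savings: ${low:,} to ${high:,}")
print(f"Annual savings:  ${annual_low:,} to ${annual_high:,}")
```

That works out to $3,000 to $6,000 per month, or $36,000 to $72,000 per year, before accounting for the cost of running the gateway itself.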

Making the Transition

The gap between "let's try ChatGPT" and "AI is critical infrastructure" is smaller than you think. Modern AI gateways like LLM Gateway deploy via Docker, integrate with existing identity providers, and offer OpenAI-compatible APIs that work with your current tools.
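In practice, "OpenAI-compatible" usually means existing tools keep sending the same chat completion request and only the base URL and API key change. The sketch below builds such a request with Python's standard library without sending it. The gateway address, key, and model name are placeholders, not values documented by any specific product.

```python
import json
import urllib.request

# Hypothetical values: replace with your gateway's address and key.
GATEWAY_BASE_URL = "http://localhost:8080/v1"
GATEWAY_API_KEY = "example-key"


def build_chat_request(base_url, api_key, model, user_message):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    GATEWAY_BASE_URL, GATEWAY_API_KEY, "example-model", "Hello"
)
# To actually send it: urllib.request.urlopen(request)
```

Because the request shape is unchanged, tools that already let you configure a custom base URL can typically be pointed at a gateway without code changes.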

The question isn't whether to standardize AI access. It's whether you'll do it before the next security incident or budget surprise forces your hand.

For enterprises, the future of AI isn't about choosing the right model. It's about controlling how AI flows through your organization—securely, accountably, and on your own terms.

Ready to take control of your organization's AI? Get started with LLM Gateway